Though Libra has a long way to go before running in production, the Libra testnet was launched on the day of the official announcement of the Libra project.

This testnet is experimental, but is supposed to have the same properties as the mainnet, making application migration from testnet to mainnet straightforward.

So it makes sense to look into Libra with this testnet, assuming that the regulatory challenges will settle one day and Libra will launch its mainnet.

As the nitty-gritty regulatory work needed to take Libra into production has only just begun, we can see how ambitious this project really is. It is quite possible that it will never be launched at all.

So, we moved our use case a little bit into the future, but thanks to the Libra testnet, we can try it right now! (…with worthless Libra testnet coins of course…)

Working for the Wintermute DAO

Since we are far in the future, we can be creative about how payments work by then. So our scenario is this:

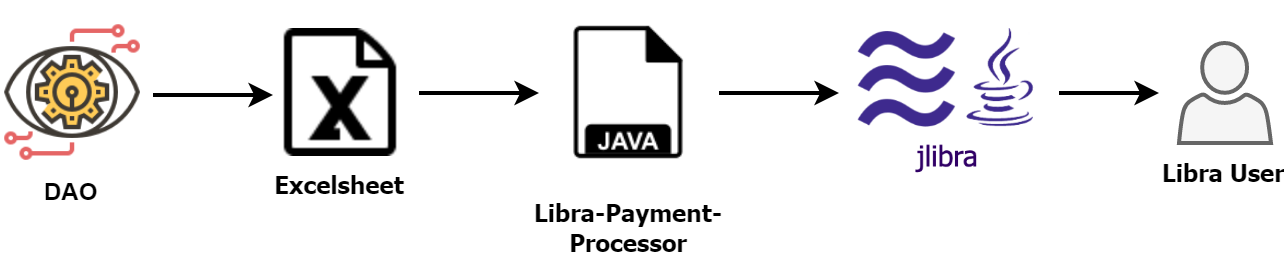

Henry and his hacker buddies are working for the Wintermute DAO. They are not employed (employment these days is only possible for government jobs), but work based on a contract they signed with the Wintermute DAO, an AI that represents a decentralized corporation and concludes contracts with all kinds of external parties.

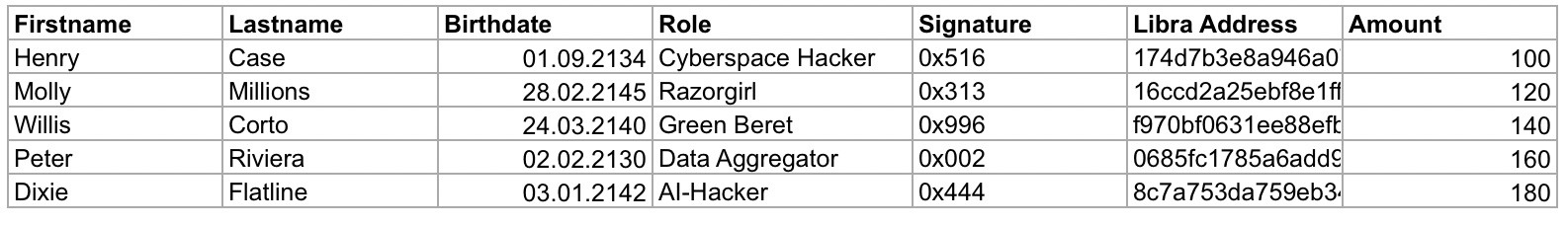

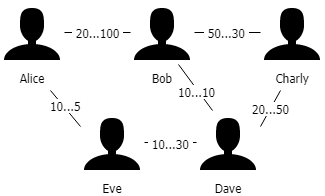

We are not really sure why and how, but Excel actually survived the atomic wars, the zombie apocalypse and Ragnarök and is used by the Wintermute DAO to store the obligations to the contractors:

The DAO's payment processor reads these records line by line, extracts the amount and the Libra address, and transfers the amount. Optionally (see below), the signature is verified to make sure the address actually belongs to the contractor.
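To make the "read line by line, extract amount and address" step concrete, here is a minimal sketch. It assumes the Excel sheet has been exported as semicolon-separated text with the columns contractor, amount and address, and that an address is 64 hex characters, as on the early testnet. The class and field names are hypothetical and not taken from the sample repository.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;

final class PayrollReader {

    /** One payroll row: who gets paid, how much (in Libra), and to which address. */
    static final class Obligation {
        final String contractor;
        final BigDecimal amount;
        final String address;

        Obligation(String contractor, BigDecimal amount, String address) {
            this.contractor = contractor;
            this.amount = amount;
            this.address = address;
        }
    }

    /** Parses "contractor;amount;address" rows, skipping blank lines and '#' comments. */
    static List<Obligation> parse(List<String> lines) {
        List<Obligation> result = new ArrayList<>();
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;
            }
            String[] cols = trimmed.split(";", -1);
            if (cols.length < 3) {
                throw new IllegalArgumentException("Expected 3 columns: " + line);
            }
            String address = cols[2].trim();
            // Assumption: addresses are 32 bytes written as 64 hex characters.
            if (!address.matches("[0-9a-fA-F]{64}")) {
                throw new IllegalArgumentException("Not a valid address: " + address);
            }
            result.add(new Obligation(cols[0].trim(), new BigDecimal(cols[1].trim()), address));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> rows = List.of(
                "# contractor;amount;address",
                "Henry;100;" + "ab".repeat(32));
        for (Obligation o : parse(rows)) {
            System.out.println(o.contractor + " gets " + o.amount + " Libra at " + o.address);
        }
    }
}
```

Rejecting malformed rows before any transfer is sent keeps a typo in the sheet from turning into a payment to the wrong address.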

Starting the Payroll…

- Start the java-libra-shell

- Create an account from a mnemonic with the command a cwm 'chaos crash develop error fix laptop logic machine network patch setup void'

- The generated account 0 is Wintermute’s sender account. For each hacker account, add the index to the mnemonic, e.g. to create Henry’s account, use a cwm 'chaos crash develop error fix laptop logic machine network patch setup void' 1

- Note the account balances and sequence numbers with the command q b INDEX, e.g. q b 1 for Henry’s account

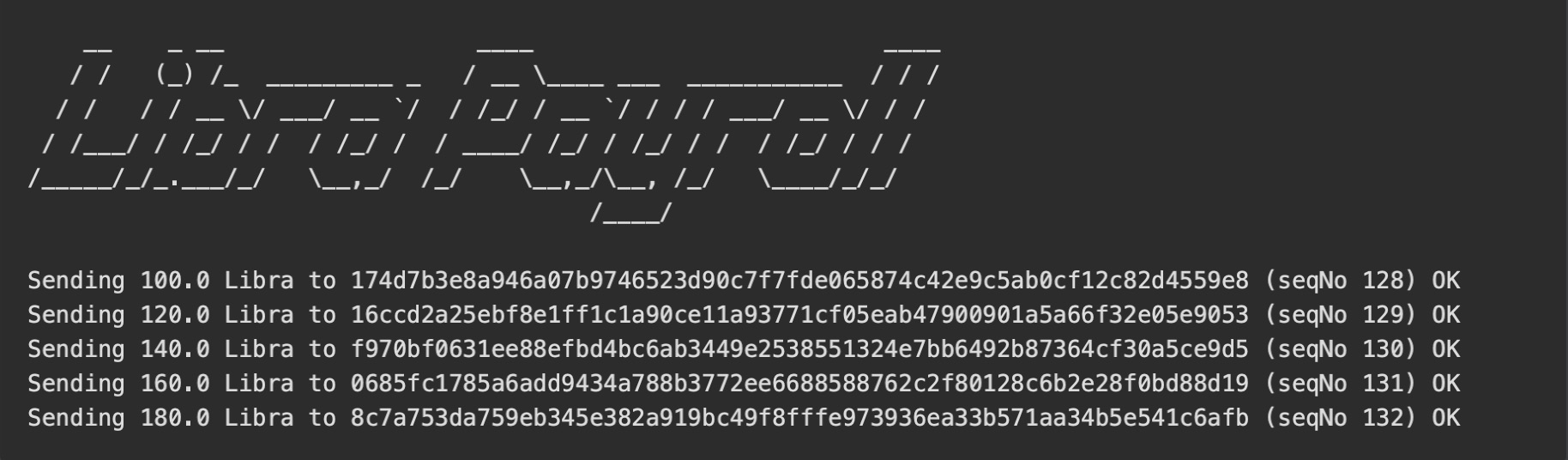

- Run the java-payment-processor

- Note how the balances have changed: account 0 (the sender account) has 700 Libra less, the balances of accounts 1-5 have increased by 100, 120, …, and the sequence numbers have changed accordingly
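The numbers from the last step can be checked with a little arithmetic. The sketch below assumes that the five payouts rise in steps of 20 (100, 120, 140, 160, 180), which matches the 700 Libra leaving the sender account, and it ignores gas fees. The class name is hypothetical.

```java
final class PayrollTotals {

    static final long MICRO_PER_LIBRA = 1_000_000L;

    /** Sums whole-Libra payouts and returns the total in micro-Libra. */
    static long totalMicroLibra(long[] payoutsInLibra) {
        long total = 0;
        for (long payout : payoutsInLibra) {
            // The *Exact methods throw instead of silently overflowing.
            total = Math.addExact(total, Math.multiplyExact(payout, MICRO_PER_LIBRA));
        }
        return total;
    }

    public static void main(String[] args) {
        long[] payouts = {100, 120, 140, 160, 180}; // assumed values for accounts 1-5
        long total = totalMicroLibra(payouts);
        // prints "Sender pays 700 Libra in 5 transactions"
        System.out.println("Sender pays " + total / MICRO_PER_LIBRA
                + " Libra in " + payouts.length + " transactions");
    }
}
```

Since a Libra sequence number counts the transactions an account has sent, the sender's sequence number should have gone up by five after the run.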

Implementing the Payment Process in Java

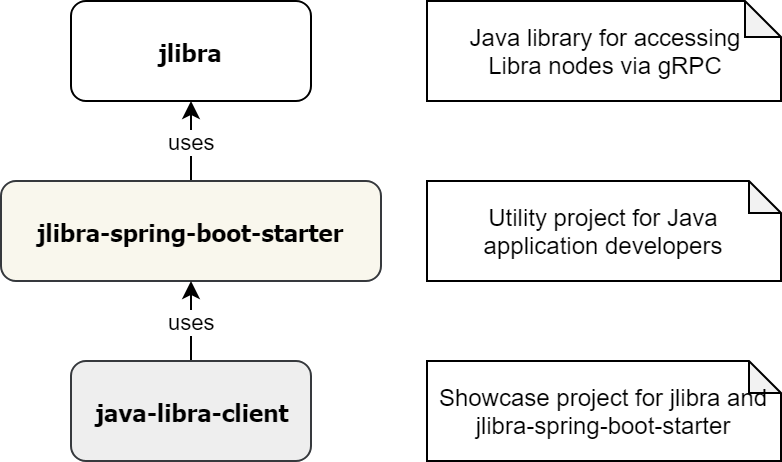

Using Libra from Java is really simple: we have implemented the application using jlibra and the extension jlibra-spring-boot-starter.

As you can see from the repo, there is not much to do:

- Create a pom.xml or build.gradle for your application

- Specify the application.properties for your testnet

- Inject the utility classes: JLibra, PeerToPeerTransfer, AccountStateQuery, etc.

- Use the pre-configured classes in your application

We implemented all steps necessary for the payment processor in the Main class (Main.java). The crucial functionality, the coin transfer, looks like this:

    @Autowired
    private PeerToPeerTransfer peerToPeerTransfer;

    @Autowired
    private AccountStateQuery accountStateQuery;

    @Autowired
    private JLibra jLibra;

    // Transfers the given amount of Libra to the receiver and returns the
    // sender's sequence number as fetched right before the transfer.
    private long transferLibraToReceiver(String receiverAddress, BigDecimal amount) {
        long seqNo = fetchLatestSequenceNumber(
                KeyUtils.toByteArrayLibraAddress(SENDER_PUBLIC_KEY.getEncoded()));

        // The amount is passed in micro-Libra (1 Libra = 1,000,000 micro-Libra).
        // Scale first, then convert, so fractional amounts are not truncated to 0.
        long microLibra = amount.multiply(BigDecimal.valueOf(1_000_000)).longValue();

        PeerToPeerTransfer.PeerToPeerTransferReceipt receipt =
                peerToPeerTransfer.transferFunds(
                        receiverAddress,
                        microLibra,
                        ExtKeyUtils.publicKeyFromBytes(SENDER_PUBLIC_KEY.getEncoded()),
                        ExtKeyUtils.privateKeyFromBytes(SENDER_PRIVATE_KEY.getEncoded()),
                        jLibra.getGasUnitPrice(),
                        jLibra.getMaxGasAmount());

        System.out.println(" (seqNo " + seqNo + ") " + receipt.getStatus());
        return seqNo;
    }
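The transfer takes the amount as a whole number of micro-Libra, so the conversion from BigDecimal deserves a second look: calling longValue() before scaling drops any fractional part. A minimal sketch of a stricter conversion, using only java.math (the class and method names are hypothetical):

```java
import java.math.BigDecimal;

final class MicroLibra {

    /** 1 Libra = 10^6 micro-Libra. */
    private static final int DECIMALS = 6;

    /** Converts a Libra amount to micro-Libra; throws if precision would be lost. */
    static long toMicroLibra(BigDecimal libra) {
        if (libra.signum() < 0) {
            throw new IllegalArgumentException("Amount must not be negative: " + libra);
        }
        // longValueExact() throws ArithmeticException when a fraction remains
        // or the value does not fit into a long.
        return libra.movePointRight(DECIMALS).longValueExact();
    }

    public static void main(String[] args) {
        System.out.println(toMicroLibra(new BigDecimal("100"))); // 100000000
        System.out.println(toMicroLibra(new BigDecimal("0.5"))); // 500000
        // Truncating before scaling loses the whole amount:
        System.out.println(new BigDecimal("0.5").longValue() * 1_000_000); // 0
    }
}
```

Failing loudly on amounts finer than one micro-Libra is a design choice for this sketch; a payroll system might prefer an explicit rounding rule instead.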

That’s all. In our sample application, this works against the testnet with the preconfigured accounts (corresponding to the mnemonic stated in the README).

Real World Use Cases before the year 2525

But how can we use this before the arrival of AI-Hackers and decentralized autonomous organizations? And what if we want to get our salary in EUR and just want to do international payments with Libra?

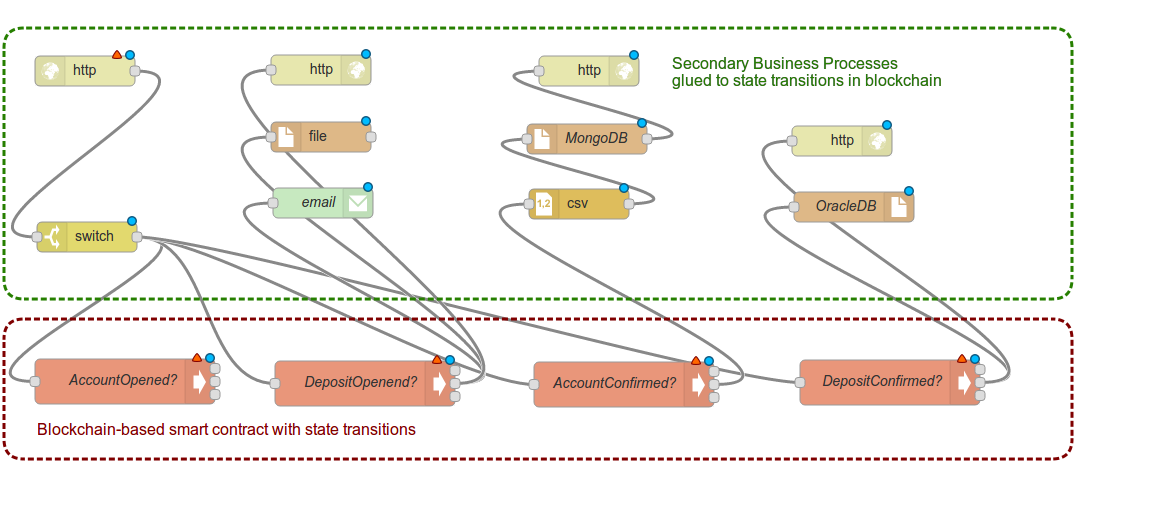

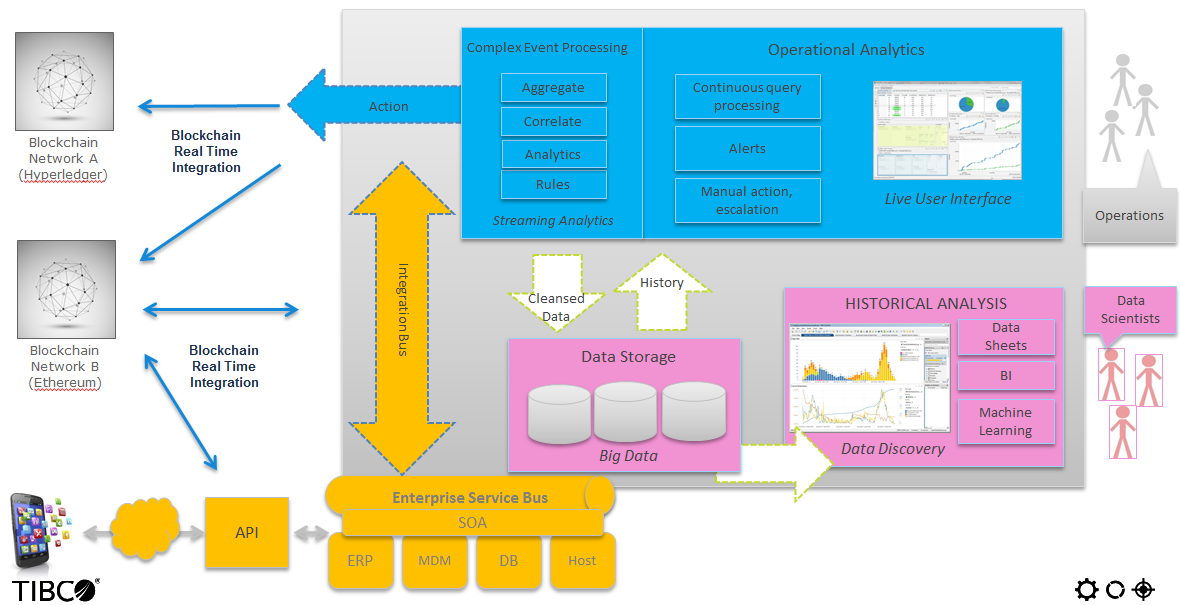

What did we do here? We connected Libra to an existing Java application. This Java application can do whatever Java applications do these days, most likely some enterprisey stuff.

So how about connecting the payment processor of your corporate ERP system? Easy. How about integrating with one of those huge EAI products? Peasy. Workflows, BPMN engines, data science frameworks? Yes, just like that. If it’s accessible from Java (or from one of the other languages with an adapter, such as JavaScript, Python, Go or Rust), it can use Libra.

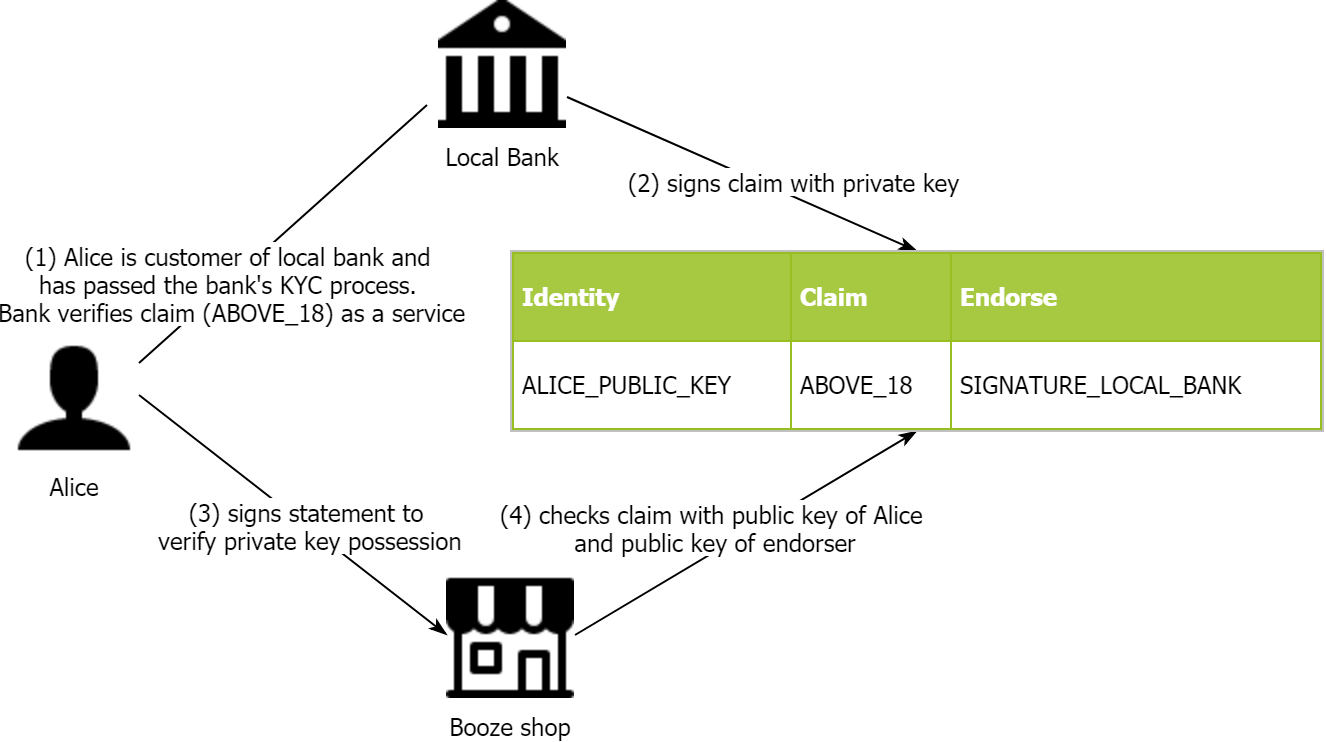

Bonus: Authorizing Transactions

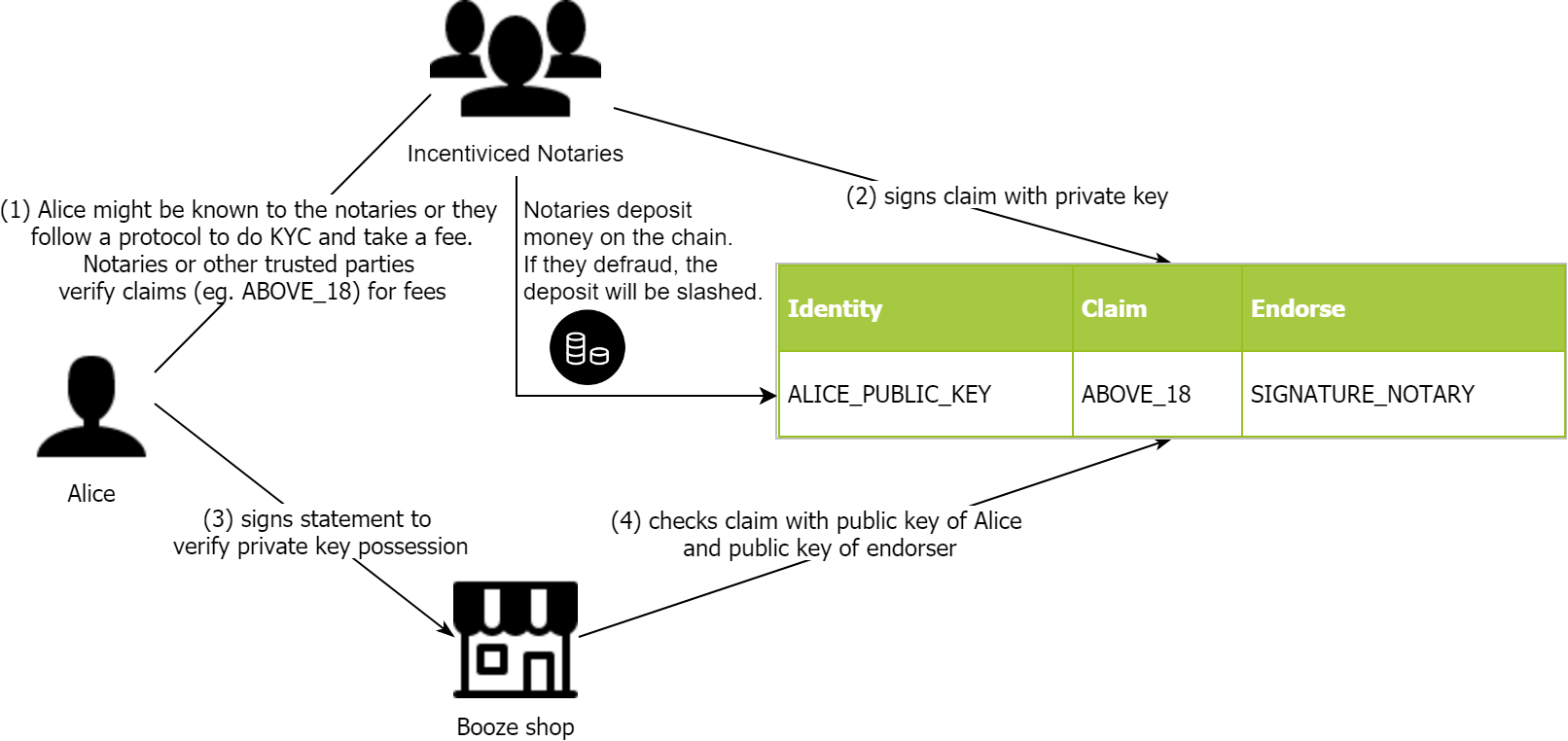

In the old banking world, just giving your account information to the employer was enough. The banks checked all the regulatory stuff and made sure that the right person got the right money, or reversed the transaction if not. In the Libra world, it is not that easy.

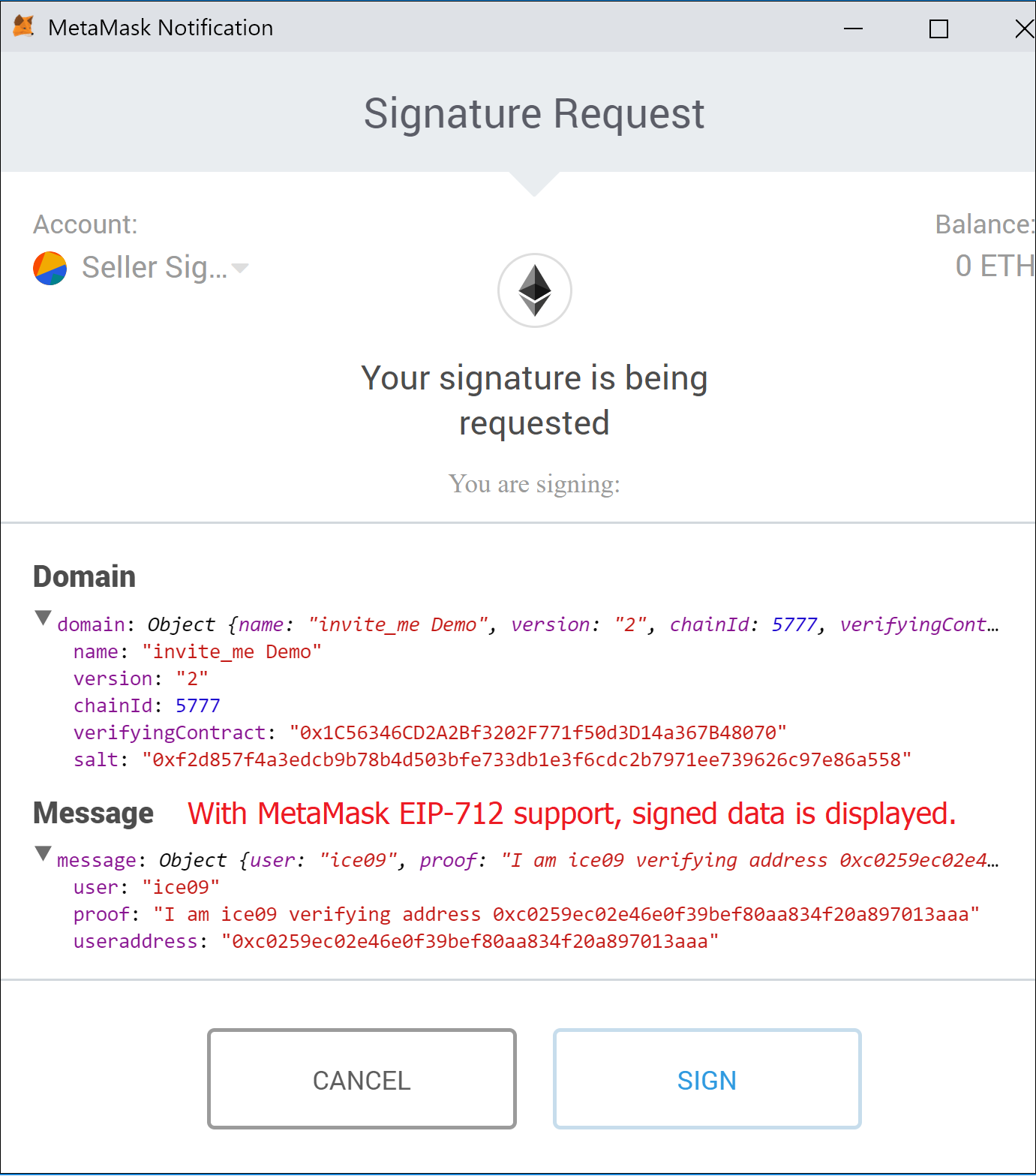

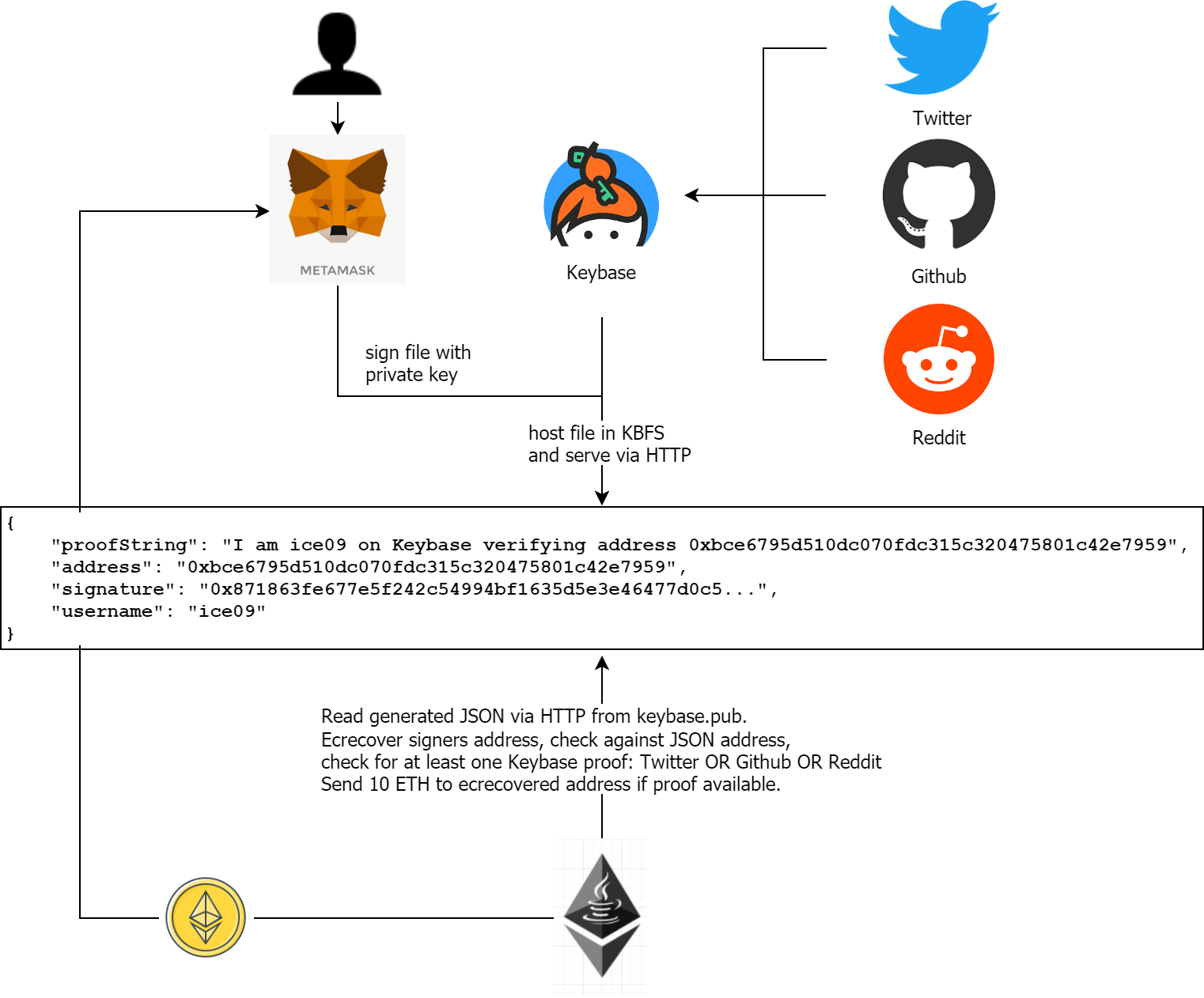

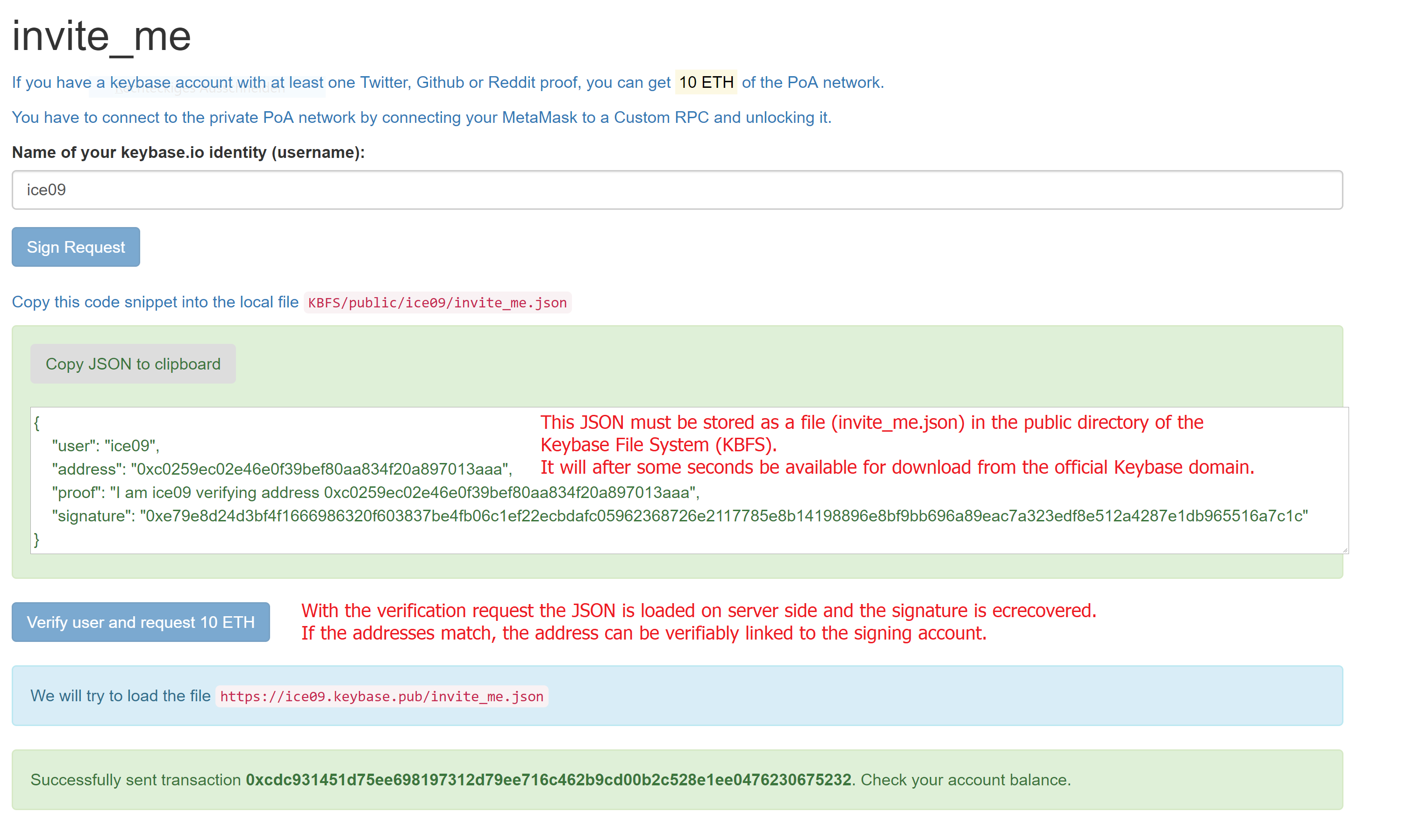

There is a simple way of making sure that the given address actually belongs to the person authorized to receive the payment: signatures. The contractor signs a pre-defined message like

I am working for Wintermute DAO and own Libra address 0x123 which should receive my earnings. Signed, Peter on 12/12/2103.

This makes it easy to link the address to the signature and ensures that the address is correct, as nobody could have signed the message without access to the private key of the Libra address.
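The sign-and-verify round trip can be sketched with the JDK alone. Libra accounts use Ed25519 keys, which java.security supports from Java 15 on. The sketch generates a fresh key pair instead of using a real Libra account, the class and method names are hypothetical, and the check that the public key actually hashes to the stated address is left out.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;

final class AddressProof {

    /** Creates a throwaway Ed25519 key pair (requires Java 15 or newer). */
    static KeyPair newKeyPair() {
        try {
            return KeyPairGenerator.getInstance("Ed25519").generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Ed25519 is not available", e);
        }
    }

    /** Signs the UTF-8 bytes of the message with the contractor's private key. */
    static byte[] sign(PrivateKey key, String message) {
        try {
            Signature signer = Signature.getInstance("Ed25519");
            signer.initSign(key);
            signer.update(message.getBytes(StandardCharsets.UTF_8));
            return signer.sign();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Signing failed", e);
        }
    }

    /** Returns true only if the signature matches both the message and the public key. */
    static boolean verify(PublicKey key, String message, byte[] signature) {
        try {
            Signature verifier = Signature.getInstance("Ed25519");
            verifier.initVerify(key);
            verifier.update(message.getBytes(StandardCharsets.UTF_8));
            return verifier.verify(signature);
        } catch (GeneralSecurityException e) {
            return false; // a malformed signature counts as "not verified"
        }
    }

    public static void main(String[] args) {
        KeyPair peter = newKeyPair();
        String message = "I am working for Wintermute DAO and own Libra address 0x123 "
                + "which should receive my earnings. Signed, Peter on 12/12/2103.";
        byte[] signature = sign(peter.getPrivate(), message);

        System.out.println(verify(peter.getPublic(), message, signature)); // true
        // Changing the address in the message invalidates the signature:
        System.out.println(verify(peter.getPublic(), message.replace("0x123", "0x456"), signature)); // false
    }
}
```

Because the address is part of the signed text, swapping in another address invalidates the signature, which is what ties the payout address to the key holder.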

This way, the payments are arguably safer than the old way of transferring money, provided that “the system” makes sure that the address has passed AML and KYC checks. This will presumably be true for the “wallet-level” applications in Libra.

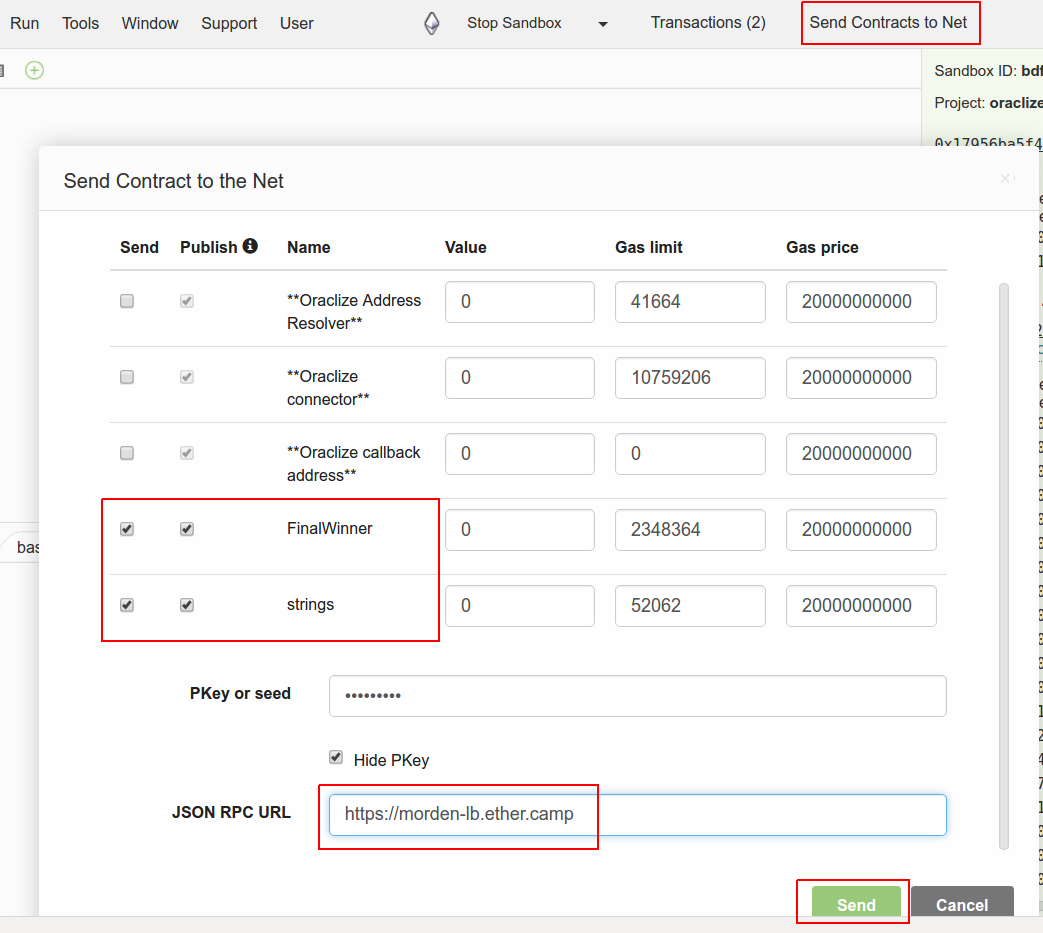

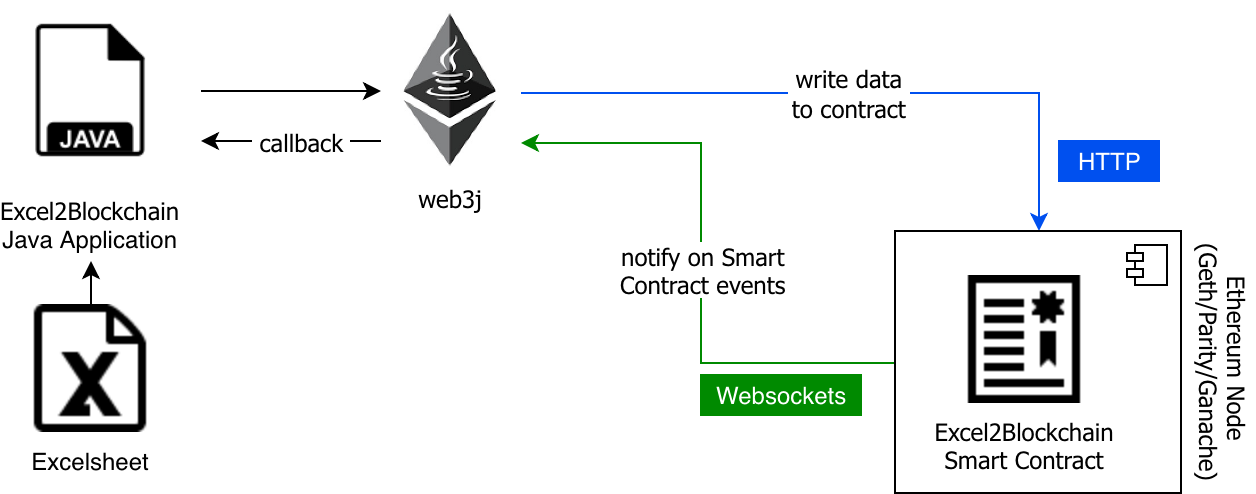

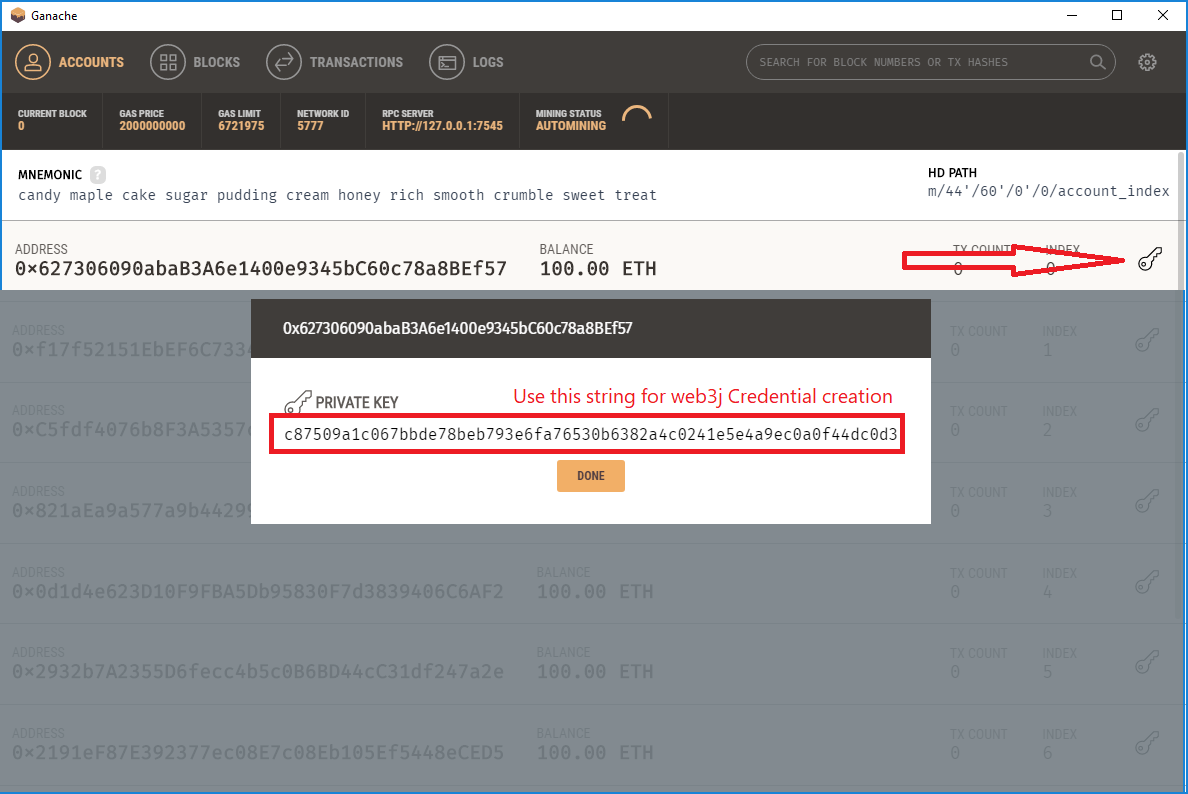

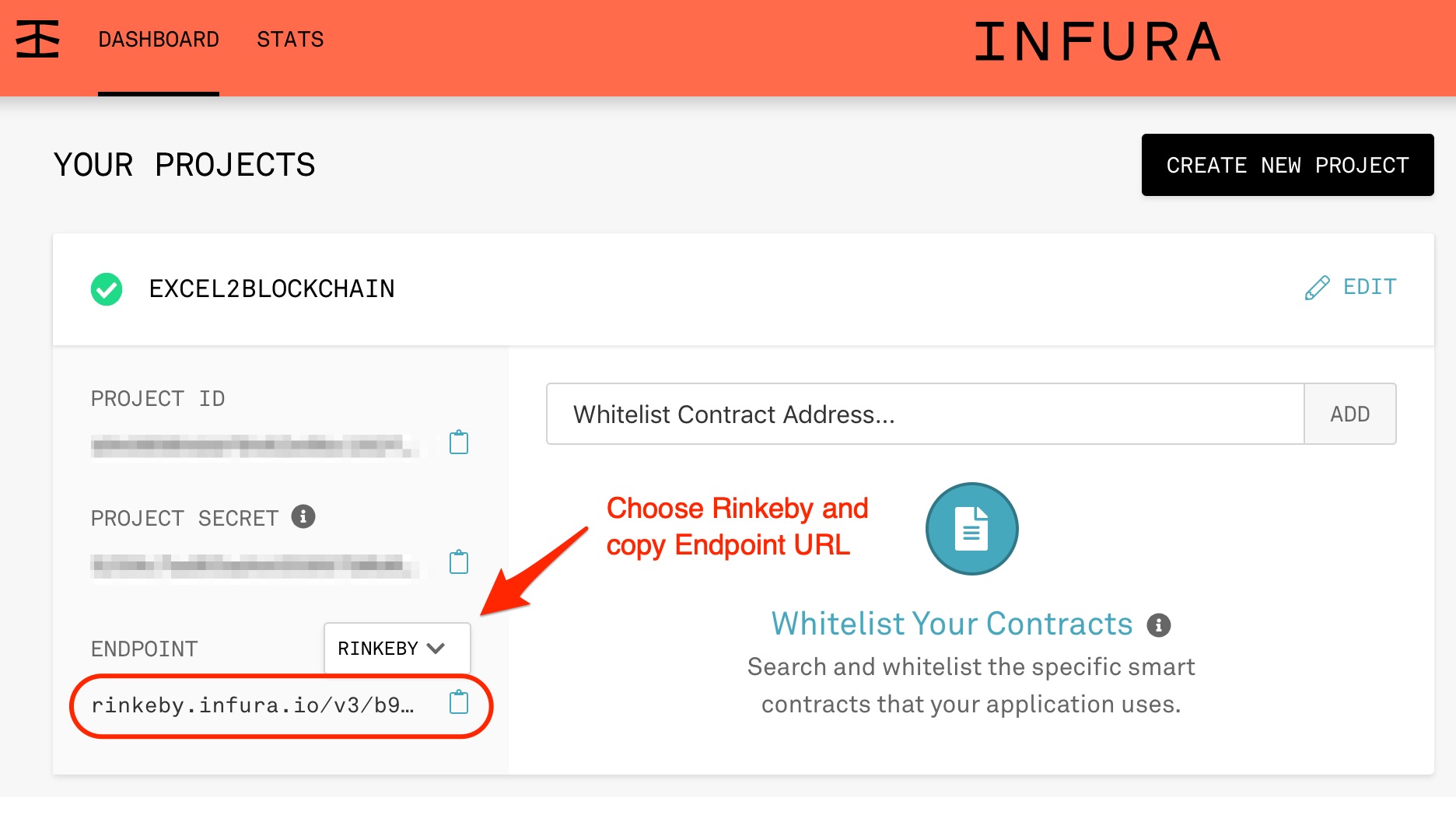

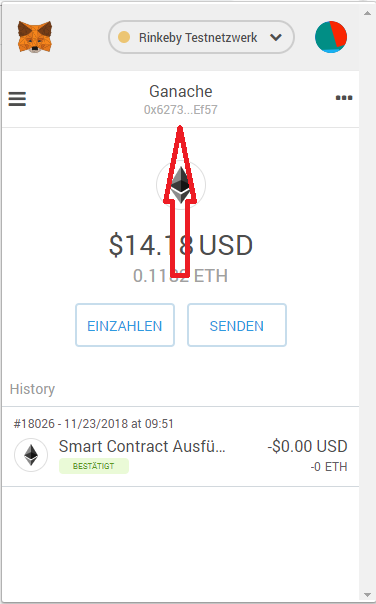

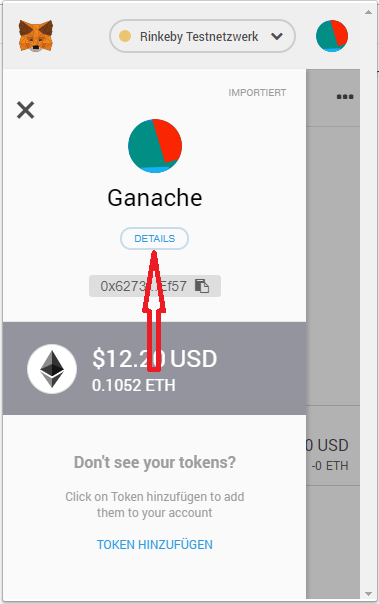

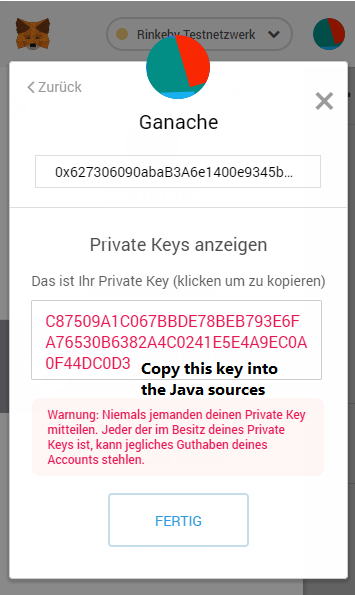

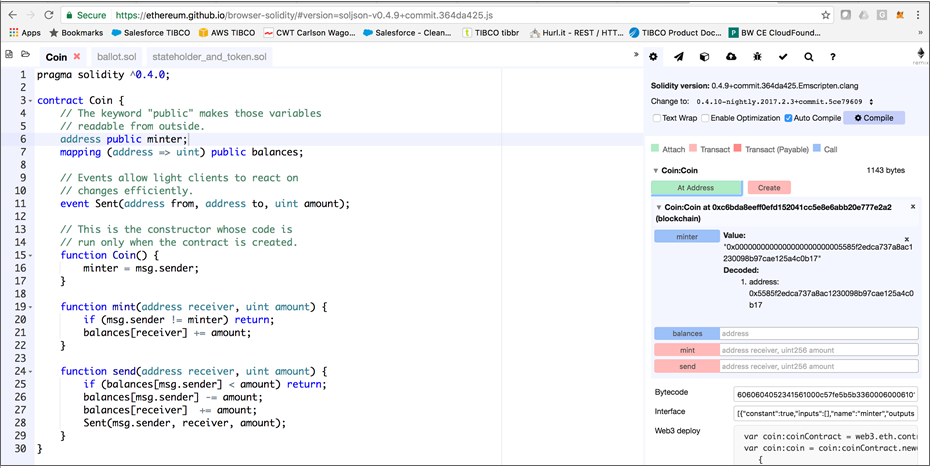

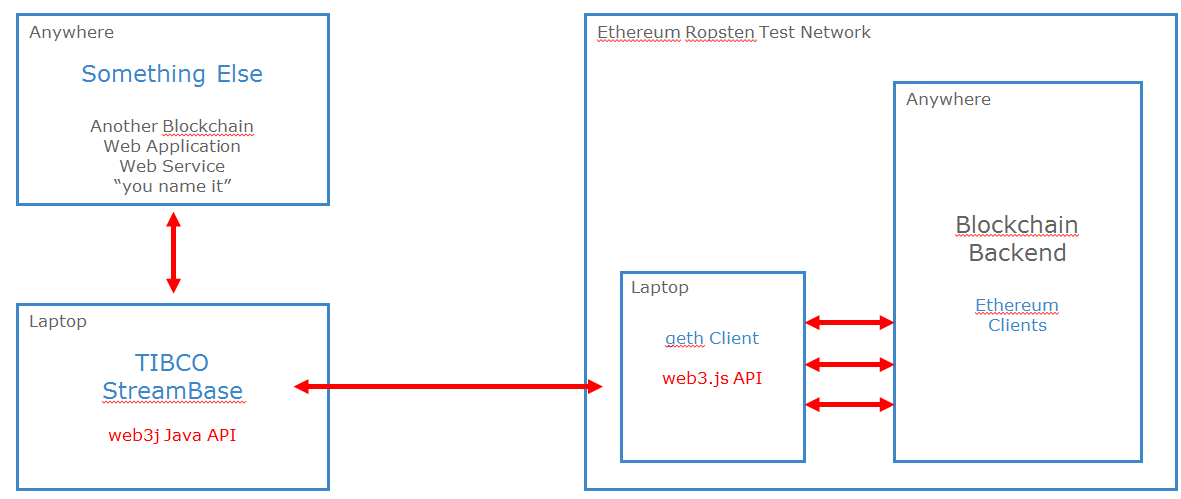

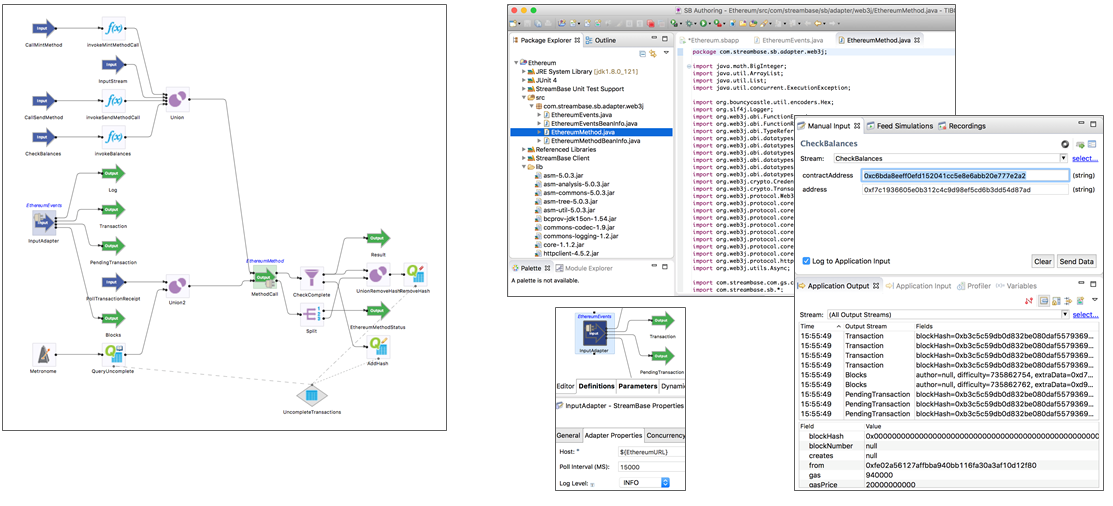

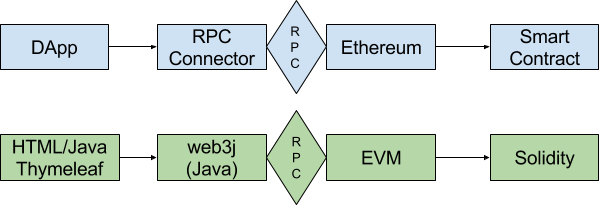

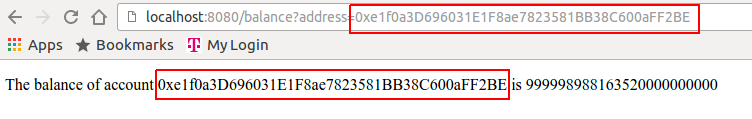

Of course, you can switch the Ethereum network in the file EthereumController to morden or mainnet as you like; just read the welcome mail from infura.io. Or you can use a local Ethereum node like geth with RPC enabled (geth --rpc) and pass http://localhost:8545 to the constructor of HttpService in the Web3j factory in EthereumController.
